AI in healthcare: what are the risks for the NHS?

BBC

At a time when there are more than seven million patients on the NHS waiting list in England and around 100,000 staff vacancies, artificial intelligence could revolutionise the health service by improving patient care and freeing up staff time.

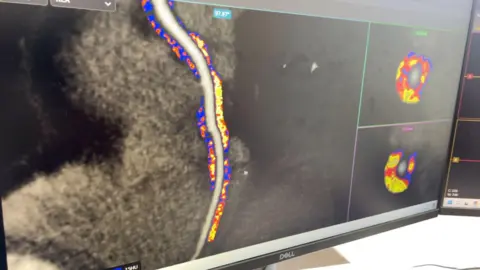

Its uses are varied, from spotting risk factors in a bid to help prevent chronic conditions such as heart attacks, strokes and diabetes, to assisting clinicians by analysing scans and x-rays to speed up diagnosis.

The technology is also maximising productivity by carrying out routine administrative tasks, from running automated voice assistants to scheduling appointments and capturing doctors’ consultation notes.

‘Transformative’

Generative AI – a type of artificial intelligence that can produce various types of content, including text and images – will be transformative for patient outcomes, according to Sir John Bell, a senior government advisor on life sciences.

Sir John is president of the Ellison Institute of Technology in Oxford – a major new research and development facility investigating global issues, including the use of AI in healthcare.

He says generative AI will improve the accuracy of diagnostic scans and generate forecasts of patient outcomes under different medical interventions, leading to more informed, personalised treatment decisions.

But he warns that researchers should not work in isolation. Instead, innovation should be shared fairly around the country so that no communities miss out.

“To achieve these benefits the NHS must unlock the enormous value currently trapped within data silos, to do good while safeguarding against harm,” Sir John says.

“Allowing AI access to all the data, within safe and secure research environments, will improve the representativeness, accuracy and equality of AI tools to benefit all walks of society, reducing the financial and economic burden of running a world-leading National Health Service and leading to a healthier nation.”

Ellison Institute of Technology

‘Mitigate risks’

AI opens up a world of possibilities, but it brings risks and challenges too, like maintaining accuracy. Results still need to be verified by trained staff.

The government is currently evaluating generative AI for use in the NHS – one issue is that it can sometimes “hallucinate” and generate content that is not substantiated.

Dr Caroline Green, from the Institute for Ethics in AI at the University of Oxford, is aware of some health and care staff using models like ChatGPT to search for advice.

“It is important that people using these tools are properly trained in doing so, meaning they understand and know how to mitigate risks from technological limitations… such as the possibility for wrong information being given,” she says.

She feels it is important to engage people working in health and social care, patients and other organisations early in the development of generative AI and to keep on assessing any impacts with them to build trust.

Dr Green says some patients have decided to deregister from their GPs over the fear of how AI may be used in their healthcare and how their private information may be shared.

“This of course means that these individuals may not receive the healthcare they may need in the future and fall through the cracks,” she says.

Then there is the risk of bias. AI models may be trained on datasets that might not reflect the populations they will be applied to, exacerbating health inequalities based on things like gender or ethnicity.

Therefore, regulation is key. It needs to keep patients safe and protect their personal data, whilst at the same time increasing capacity to keep up with developments and allow AI to evolve and learn on the job.

AI-powered medical devices are tightly regulated by the Medicines and Healthcare products Regulatory Agency (MHRA).

The Health Foundation think tank recently published a six-point national strategy to ensure AI tools are rolled out fairly and regulation is updated.

Nell Thornton, a senior improvement analyst at the Health Foundation, says: “There are so many of these models coming through the system that it’s difficult to assess them quickly enough.

“That’s where we need support around the capacity of the system to regulate these things and we also need some clarity on some of the challenges that will come from the quirkiness of generative AI systems and what additional regulation they might need.”

Dr Paul Campbell, MHRA Head of Software and AI, says: “As a regulator, we must balance appropriate oversight to protect patient safety with the agility needed to respond to the particular challenges presented by these products to ensure we continue to be an enabler for innovation.”

The Department of Health and Social Care says the new Labour government will “harness the power of AI” by purchasing new AI-enabled scanners to diagnose patients earlier and treat them faster.

While few can deny the transformative effect AI is having within healthcare, there are challenges to overcome, not least that NHS staff need the confidence to use it and patients must be able to trust it.
